Investors and policy makers often need to know how far the programmes they are financing or implementing are having the intended impact. In this blog KS Aditya and SP Subash take us through the different methods of impact assessment and explain ‘why’ and ‘when’ to use these.

BACKGROUND

This blog is an honest attempt to explain the basic concepts of Impact Assessment in a language that Homo sapiens can understand and not just Homo economicus (Box 1). In a nutshell, we will be trying to convince you that scary methodologies used (sometimes many of us might have not even heard of these) and econometric juggleries that we employ in assessing impact is perfectly justified. However, a word of caution before you read any further, if you expect this blog to give you the ‘best method to assess impact’ we are very sorry to disappoint you – there is no ‘gold standard’ method, best fit for all cases. Our aim is just to introduce you to the different methods of impact assessment, and more importantly, to tell you why and when to use them, and also when not to use a particular method!

WHY IMPACT ASSESSMENT?

“In God we trust, rest bring data” – Edward Deming

Policy makers need scientific and reliable estimates of how effective a programme or intervention (technology) is. Even the most promising projects might fail to generate the expected impacts. So, policy makers need to know how far the programmes are generating the intended impact. This equips them to take calls on reorienting the programmes as well as in allocating funds. In this line Impact Assessment is an effort to understand whether the impacts of a programme (Net welfare gain – only for readers who belong to species Homo economicus) are attributed to the programme and not to some other causes. Ultimately, the aim of Impact Assessment is to establish the causal link between the programme and the impact, and to arrive at reliable estimate of the ‘size of impact’.

Let us take one case, where a new programme is launched to increase the income of beneficiaries. After a few years, the government wants to know the impact of the programme. One common and very popular approach is to collect data from a few beneficiaries (treatment) and non-beneficiaries (control) and estimate the difference in income between the two groups as impact. However, the difference in mean income between the two groups cannot be called impact, as we haven’t yet established ‘causation’- how can we say that the difference in income is only due to the programme and not due to other factors? How then to assess impact?

ATTRIBUTING IMPACT

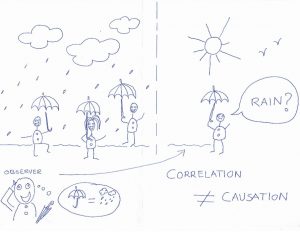

In lab and field experiments carried out by biological scientists, three principles are used to establish causation: replication, randomization, and local controls. Randomization makes sure that the unobservable characters remain the same across treated and control groups. Local control ensures that all the variables, except for the treatment, are same across treatment and control. For example, if the purpose of the experiment is to know the effect of organic manure on crop yield, all other factors like variety, soil type, seed rate, chemical fertilizers, date of sowing, etc., must remain the same across treatment group and control group. If the only difference between treatment and control group is organic manure, we can safely say that increase (or decrease) in yield is due to manure use. To sum up, local control and randomization in case of experiments ensure that the treated and control groups are similar, which enable us to make ‘causal claims’ by simply taking the mean difference across groups (for which you need to test statistical significance).

Source: Illustrated by authors based on Doug Neill’s work (read further https://commons.trincoll.edu/cssp/2013/12/09/10886/)

Let us shift our focus back to social science research. Mostly we do research based on ‘observational data’; we collect data from observations (samples) where the researcher has no control over the variables unlike an experiment. So, in most cases, the treated and control groups are not similar with respect to many variables and the difference in outcome variable (Income for example) cannot be attributed to treatment (Programme or intervention) and would result in bias (Bias can be considered as a cousin of error!). More specifically, bias in estimate of impact arising due to the pre-treatment difference in covariates is called ‘Selection Bias’. For example, let us say that we would like to know the impact of rice seed treatment on farmer’s income. The usual research design would be to collect income and other data from both adopters and non-adopters. However, as per theoretical expectations, adopters of a technology are more motivated, have better education and extension contact compared to non-adopters. So, we cannot say that higher income of adopters is only due to seed treatment as it could also be due to pre-treatment differences in education, motivation, extension contact, etc. We can say that ‘difference in income across treatment and control’ as an estimate of impact suffers from ‘sample selection bias’.

You might wonder if there are cases where there can be no sample selection bias. Yes, if the beneficiaries for a programme is selected ‘at random’ then, by definition, the beneficiary group and non-beneficiary group would be similar on average (please note that we are using the term ‘similar’, not ‘same’). Also, the two groups will be similar on average, you can’t expect each person in the beneficiary group to be similar to each person in the non-beneficiary group (on different variables). In this case, simple difference of means across treatment and control can be treated as impact and hence we say ‘randomness is economists’ best friend’. Unfortunately, in most programmes, the selection of beneficiaries is based on some observed characters and not a random assignment (except for one or two programmes in the world, like conditional cash transfer scheme for improving school enrolment in Mexico known as ‘Progressa’). It is because few programmes are targeted for a specific target group (like people below poverty line or small farmers) where random assignment is impossible by design. In the case of other programmes, random allotment is simply not practical on a large scale due to socio-political factors.

Isn’t sample selection bias due to sampling error? Definitely not. Let me try to convince you that ‘random sampling’ cannot cure selection bias. Selection bias arises due to pre-treatment differences in beneficiary and non-beneficiary groups with respect to some variables, say education and land holding size, for our convenience. Let us assume that the beneficiaries of the programme are mostly large farmers and well-educated farmers. So, when you take random sample, it is quite obvious that most of the beneficiaries are large farmers and well-educated and vice versa with non-beneficiaries, so random sampling cannot eliminate selection bias. The point we want to make is that random sampling is not the solution for selection bias. However, we acknowledge the importance of random sampling in social science research.

If selection bias is the problem, then why not take the value of outcome variable before implementing the programme as a baseline, and take a second measurement after implementation? Will the difference between the value of outcome variable after the programme and before programme become a measure of impact? Sadly ‘no’. The outcome variable is measured at two different periods of time and in between many things might have changed. We cannot attribute the effect only to the programme and causation cannot be established.

The next common misconception is regression of outcome variable against a dummy variable indicating that treatment and all other control variables will be sufficient to account for selection bias and partial regression coefficient of the dummy variable as an unbiased estimate of impact. However, in this scenario, the dummy variable for treatment is not exogenous (as the selection into either treated or control group depends partly on the observed control variables included in the model), which is a violation of ordinary least square (OLS) assumption. Also, if the selection of treatment and control depends on unobservable (like motivation), then the error term will be correlated with dummy variable which is again a violation of OLS assumption. In this case, the estimate of impact will be biased.

By this time, we have made our point clear that selection bias is inherent in observational studies and estimation without accounting for selection bias, which tends to be biased (over/underestimation). Next important question is what should/can we do to account for sample selection to minimize the bias? We would like to make one thing clear: if anyone tells you that some method will eliminate bias don’t trust them. Because, no method can completely eliminate bias, each of the methodologies that we discuss here have their own advantages and disadvantages. The purpose is to minimize the bias in estimates and make it as accurate as possible. Moreover, there is no statistical test to tell us the best method for a particular data set, unlike the Huassman test for selection between fixed effect model and random effect model in panel data regression (econometric juggleriesJ). So, the selection of method is left to the discretion of the researcher, who has to take a call based on the research question, size of sample, type of data and other factors.

There are different approaches available in literature which could help us in doing impact assessment. We will now briefly discuss these approaches.

IMPACT ASSESSMENT METHODS

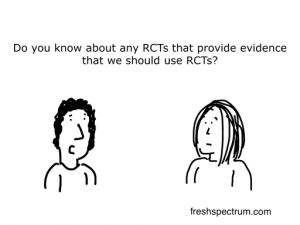

Randomized Control Trials: The gold standard of impact assessment

We hope you are clear by now that observation studies suffer from selection bias because of ‘non-random assignment’. What if can assign the units into either of the groups randomly? Or in other words, if the researcher has control over the treatment assignment, he could conduct an experiment where the treatment allocation is done randomly such that participation in the programme is independent of either observed or unobserved covariates. By definition, random allotment would mean that the treated unit and control unit are similar to each other on average and are comparable (we need to perform balancing test after randomization to make sure of this). Simple difference in mean outcomes across the group will be an estimate of impact. This looks simple on paper, however, it is difficult to implement in the field. This approach can be used only when the treatment allocation is under the control of the researcher. RCT needs to be planned before a programme/ intervention is implemented. Furthermore, in cases where there is possibility of spillovers, villages or clusters may need to be randomized. Even after taking care of all these things, the RCT method is criticized for lacking External Validity.

Source: https://designmonitoringevaluation.blogspot.com/2010/05/quotes-related-to-evaluation.html

This is an ideal approach for impact assessment, regretfully, most researchers won’t get the luxury of doing it. Most of the impact assessments are ex-post observational studies and for such cases quasi-experimental approaches are available. A few commonly used approaches are discussed below.

Heckman two step model for impact assessment

In this approach, in the first step, a selection equation is estimated to capture the probability that an individual belonging to a treatment group, is dependent on a set of observed explanatory variables. This is usually estimated using Probit regression. From this regression, we estimate expected value of a truncated normal random variable, commonly known in literature as Inverse Mills ratio (IMS) or Hazard function [technically speaking Inverse Mills ratio tell us the probability that an individual will be in a treated group (or beneficiary group) over cumulative probability of the decision. Which explain that part of the error term which captures the difference in outcome variables due to the selection and not the programme itself. Is it too much jugglery? Just ignore J]. In the second stage, outcome variable is regressed upon dummy variable for treatment, along with a set of control variables, including IMS as an explanatory variable to minimize the effect of endogeneity (In simple terms, endogeneity in this context implies that the participation in a programme is determined by a set of observed and unobserved variables and is not exogenous). However, the Heckman model is developed for improving the explanatory power of the model in special case where sample self-selection leads to truncated dependent variable and OLS estimates are biased. So, the Heckman model is not specifically developed to establish the causal relationship. Hence, whenever possible, it is better to use models which are developed specifically for establishing causation. If the choice of method is limited by the smallness of a sample, it is better to use Heckman model in addition to other simple methods, such as Regression Adjustment as robustness check.

Regression Adjustment

Another very simple method (at the cost of efficiency though) for measuring impact is Regression Adjustment. We will try to explain the method in the simplest terms (though at the cost of technical fineness). The Regression Adjustment model fits two separate regressions – for the treated and control units – and estimate the partial regression coefficients for all the control variables included in the model (dependent variable – outcome variable like income). In the next step, the model estimates ‘Potential Mean Outcomes’ (PMO). PMO is the average value of the outcome if all the units in the sample are either in treated or control. (For example, what would be the mean income in case all the units in our sample were to be a beneficiary of the programme?) The Regression Adjustment model first calculates the expected value of dependent variable for the entire sample based on coefficients of regression estimated on treated units. Mean of the expected value is termed as PMO of treated group. Similarly, expected value of dependent variable for the entire sample based on coefficients of regression estimated on control units is used to estimate PMO for control units. The difference between PMO of treated and control groups is considered as estimate of impact. Again, a word of caution, the Regression Adjustment method is very sensitive to functional form of the outcome equation and model specification. In many cases, the estimate of impact changes drastically with addition/deletion of a control variable indicating model dependency leading to bias. In spite of these limitations, RA can be used as a method to assess impact, particularly when the size of sample is not large enough for semi-parametric matching methods, such as Propensity Score Matching.

Propensity Score Matching

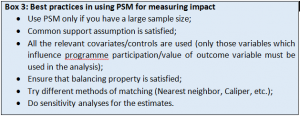

Another very popular and widely used (or should we say abused?) method for assessing impact is Propensity Score Matching (PSM). Earlier, we had explained that the problem in observational studies is that we don’t have a proper counterfactual for assessing impact because of non-random assignment of treatment. However, what if we can select units from the control group, which are similar to the treated units and construct a quasi-counterfactual group? PSM, and also many related matching methods, use the same logic for impact assessment. The objective is to find the counterfactual for each treated unit from the control group we have. Suppose, in a treated group we have a farmer with 10 acres of land, 15 years of experience, who belongs to OBC group, and similar data on many other variables. The matching methods try to identify one (or more depending on type of matching we use) farmer from the control group who is very similar to the treated unit with respect to all these characteristic features. If we want to match with respect to one character, it is fairly straightforward, however, as the number of control variables increase, matching becomes increasingly difficult. We call it ‘Curse of Dimensionality’. So, Rosenbaum and Rubin (1983) came up with a solution that if we can calculate ‘propensity score’ for each unit in the sample for each individual, which is a function of all the explanatory variables, then this propensity score can be used as a base for matching. This is as good as reduction of dimension, information on a set of control variables is captured in a single propensity score.

The propensity score is usually calculated based on logit or probit regression of treatment participation on a set of control variables. All those control variables which can impact either programme participation or the outcome should be included in the model. Once the propensity scores are calculated, the treated units are matched with the control units having similar propensity scores. The mean of difference in outcomes between treated and control units within each matched pair is considered as estimate of impact. (The basic logic is that the treated unit and the control unit in a matched pair are very similar to each other with respect to all covariates except for treatment. So observed difference in outcome is directly attributed to the treatment.) But before that we need to make sure that the propensity scores are good enough to achieve matching on the control units we have used for estimation. For this, the entire data is divided into different strata based on the value of propensity scores. Remember the basic assumption – the matching method will work if, and only if, observations having similar propensity scores also have similar values of control variables (on an average). This needs to be tested using a balancing test. Further, there could be a chance that many of the treated units have propensity scores for which no control variables are available for matching, which is termed as ‘lack of common support’.

Of late PSM has received a fair share of criticism due to some serious drawbacks. We won’t discuss all of these in detail, however, a few of them are worth mentioning here. PSM being a semi-parametric method, needs a bigger sample size to achieve proper matching and subsequent reduction in bias (so avoid using PSM for small samples). Secondly, PSM is centered on the assumption that selection bias arises due to observed variables (like age, education, caste, etc.) and not on unobserved characters (like motivation). So, if there is selection bias due to unobservable factors, PSM suffers from ‘hidden bias’.

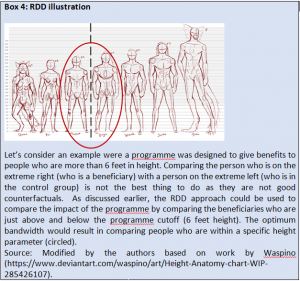

Regression Discontinuity Design

Regression Discontinuity Design (RDD) is a quasi-experimental approach of impact assessment just like PSM. RDD suits a situation where the probability of assignment in a treatment changes discontinuously with some forcing variable (Continuous variable). For instance, a particular programme is designed exclusively for small farmers. Here land cultivated by a farmer is the forcing variable and a farmer who has less than 2 hectares of land is automatically enrolled in the programme as beneficiary. So, ‘land cultivated’ becomes the forcing variable and 2 ha becomes the cutoff point. The key assumption is that the discontinuity design creates a randomized experiment around the cut-off value of the forcing variable. (In simpler terms, a farmer who has 2.1 ha of land, who is not a beneficiary of the programme, is a good counterfactual for a farmer who has 1.9 ha of land who is a beneficiary of the programme). In other words, the units which are near to either side of the cutoff (to both left and right of cutoff point) are good counterfactuals for measuring impact and only those units will be used to measure impact (see Box 4 for illustration). Well, how far must the units go to be included in the model? To decide this, optimum band width is selected (distance of units from the cutoff to be included in the model) based on selected optimization criteria. Then amongst the selected units, find the good counterfactuals, average treatment effect is usually estimated by fitting two separate regression functions (one to left of cutoff and one to the right). The biggest advantage of RDD is that the jump in regression line at the point of cutoff can be easily visualized. However, this method is criticized for using only the observations that are close to cutoff, leading to loss of information. It is also important to note that the method needs a large sample size.

Difference in Difference method

Another popular method of impact assessment is Difference in Difference estimator. If you remember, we had said earlier that before and after comparisons suffer due to the ‘trend effect’ – many things (variables from methodology perspective) change over a period of time and observed change in impact cannot be attributed to programme participation. However, DID assumes that average change in income (or any outcome variable) due to ‘other factors’ or ‘trend’ would be the same across treated and control group. In other words, average difference in income before and after the implementation of the programme in the control group is due to ‘trend effect’ as they have not received the treatment. So, by subtracting the average difference in income before and after the implementation of the programme in the treated group from that of the control group (which capture trend effect), can we get the estimate of impact? As you might have noted already, DID rests on one very crucial assumption: DID assumes that average change in income (or any outcome variable) due to ‘other factors’ or ‘trend’ would be the same across treated and control group. (On a technical note, this means ‘the variables that affect the value of outcome other than treatment are either time invariant or the time varying variables are group invariant. Confusing isn’t it?) This holds true, if and only if, the income in treated and control group moves parallelly in the pre-treatment period. This we call as ‘parallel trend assumption’, which needs to be tested before using DID. If the parallel trend assumption is violated, we may have to use matching methods before using DID. Also, if there is spillover effect of a treatment, then the DID estimates may be biased.

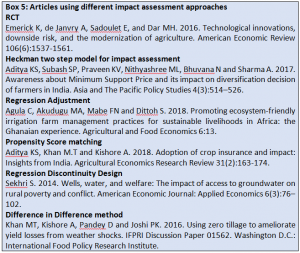

We are providing one selected paper for each of the approaches discussed below for you to read and understand (Box 5).

Apart from these methods, there are many other methods/approaches for impact assessment. Some of the others worth noting are: Inverse probability weighting, Inverse probability weighting regression adjustment, Endogeneity Switching Regression, PSM-DID, Coarsened Exact Matching (CEM), Instrumental Variable technique and synthetic control.

IMPACT PATHWAYS AND THEORY OF CHANGE

Though the objective of the blog is to highlight the need for, and methods of, impact assessment, it would be incomplete if we forget to mention the concept of impact pathway. The first step of any impact assessment exercise has to be development of impact pathways. Impact pathways are developed based on theoretical expectations regarding the expected outcome of a project and various pathways through which the impact is manifested. Impact pathways are developed based on ‘theory of change’- the process through which the changes occur, leading to long term desired changes. Let us take a simple example of women’s participation in SHGs. Participation in SHG activities, such as training and sharing information among participants, helps in increasing knowledge and skill sets. This might lead to a few women going on to explore entrepreneurial options like vegetable cultivation or kitchen gardens, leading to higher incomes. In turn, higher income can empower these women. Empowerment of women can then be linked to better nutritional and health outcomes. Such an impact pathway acts as a guide for conducting impact assessment. Assessing impact without impact pathways is akin to what George Fuechsel says, “Garbage in, garbage out!”

TO CONCLUDE

As discussed earlier, through this blog we intended to orient readers on the need for impact assessment and to introduce a few methods of impact assessment. The discussion on each method is driven by the principle of parsimony: the simpler is better than the better. However, we acknowledge that, in our endeavor to simplify, we might have missed out on a few technical things. The blog is only for understanding the basic principles behind each of the methods rather than to acquaint readers with the details on how to use it. As you are aware, by this time, there are many methods to measure impact. Each has its own premise, set of assumptions, advantages and disadvantages. Researchers must cautiously choose the right method after in-depth understanding of the research question, method and data availability. A detailed review of each method should be done so as to understand the ‘good practices’ and ‘robustness checks’ that each method demands. Moreover, reading recent literature is always advisable as Impact Assessment is an ever-evolving field where new methodologies/modifications and post-estimation tests for existing methodologies are developing at a rapid pace.

References

Rosenbaum PR and Rubin DB. 1983. The central role of the propensity score in observational studies for causal effects. Biometrika 70(1):41-55.

Thaler Richard H and Sunstein CR. 2009. Nudge: Improving decisions about health, wealth, and happiness.

Aditya KS, Scientist, Division of Agricultural Economics, ICAR- Indian Agricultural Research Institute, New Delhi. Email id: adityaag68@gmail.com

Aditya KS, Scientist, Division of Agricultural Economics, ICAR- Indian Agricultural Research Institute, New Delhi. Email id: adityaag68@gmail.com

Subash SP, Scientist, ICAR-National Institute of Agricultural Economics and Policy Research, New Delhi. Email id: subashspar@gmail.com

Congratulations to young scientists Aditya and Subash for this informative blog on impact assessment. It will be quite useful to those who wish to conduct impact studies and to those who wish to submit project proposals for funding. It covered a lot on economic impact with little focus on social impact. The authors could have referred the blog 48 of Dr.Sethuraman Sivakumar “ New advances in extension research methodologies – part 1 published by AESA.

Very useful information

Excellent blog! Timely, relevant and much-needed!

Congrats to Aditya and Subash. The methods discussed in the blog measures the impact in terms of economic indicators.I think impact of extension interventions goes beyond that

Sajesh VK exactly sir, I also read the blog and felt the same. But it can be used with some modifications based on our need.

Thanks for sharing a very useful and timely blog. In almost layman’s language the dry and least bothered boring subject is dealt using analytics. Congratulations to authors who are excelling in the profession silently. Let many more such blogs come from them and others to enrich the understanding of universe and its mysteries

“Thanks for sharing Blog 104 on Impact Assessment – while it is very informative but is complex for me and I seek some clarification.

I have taken due note of the caution indicated by the authors in para on ‘Background’ that ‘ there is no ‘gold standard’ method, best fit for all cases’ I ask clarification/more information with the hope that experienced / knowledgeable persons like you, Dr. SVN Rao and the authors can provide information and guidance and may be a budding scientist like Dr. Rajalakshmi can do some literature search (copying my mail to them).

I repeat that my comments/questions seeking clarifications are those of a development person (who keeps trying to learn) and not from a specialist in ‘Impact Assessment’.

I get an impression that ‘Discussion started with Why and What is left out’ (may be I am missing something and stand corrected). The reason for stating this point is in view of my ‘Bias’ towards assessing benefit/satisfaction as perceived by Small Holder Resource Poor Producer and not for assessment as desired by Homo economicus or Homo planner = most methodologies seem to ‘aim at assessing impact for these two and not as perceived by ‘Small Holder, Resource Poor Rural family’. I seek information information whether there are ‘Improved and Easy to use methodologies’ to assess impact as perceived by these families. Commonly used Participatory exercises/methodologies in most cases do not provide real picture (lack of desired participation of rural poor/leading questions). And if the development project is aimed at improving livelihood of these producers (the needy group and major contributor to our Food Basket) should we not have clarity as to how they perceive benefit?? To cite an example = in most cases we the technical persons (including assessment experts) take Reductionist/Commodity approach – intervention is focused on improving its production and income from a single commodity (grains/ fruits/milk) and impact is assessed while most small holders follow mixed farming and their livelihood depends on income from Whole Farm. It is likely that interventions focused on one Sub-system may have negative impact on the other sub-systems.

IMPACT PATHWAYS AND THEORY OF CHANGE – I am particularly pleased about this sub-section, however, I did not find discussions on crucial of – ‘Sustainability of the benefit after the project support is over/withdrawn’. Through extended involvement in rural livelihood development programs I learnt that it is crucial to watch impact/adoption of recommendations/technologies over an extended period and consider ways of sustaining development after the project period is over (‘Need for Exit Policy’ ). I found very few assessments undertaken on a long term basis – a few years after the project is over. For small holders factors like ‘Ease of operations, Less Dependence on outsiders/agencies, Low External Inputs etc.’ influence adoption of technologies or recommendations on a long term basis. I presume there are methodologies to take care of these aspects and provide clear picture of ‘ Sustainable Benefit’.

I hope you and the authors will find time to provide easy to understand information on aspects mentioned above” .

Response to comments of Dr. Rangnekar on our blog What, Why, and How to do Impact Assessment.

“At the outset we would extend our warm regards to Dr. Ragnekar for reading our blog and taking time to share his detailed feedback. We have tried clarify the issues raised and we hope it would also help other readers.

COMMENT 1: Informative but complicated

Authors response: Thanks for the compliment and sorry for the inconvenience. We acknowledge that the blog, at times is bit technical and complicated. But the blog is intended for academic audience and we believe that it would intrigue the readers to explore some background on impact assessment methods. Please refer an basic method “Khandker, Shahidur R.; Koolwal, Gayatri B.; Samad, Hussain A.. 2010. Handbook on Impact Evaluation :Quantitative Methods and Practices. World Bank. © World Bank. https://openknowledge.worldbank.org/handle/10986/2693

COMMENT 2: Discussion started with Why and What is left out

Authors response: The point of the paper was to explain Why to use “impact assessment methods”, and when to use a particular method. First few paragraphs of the blog are exclusively dedicated for the purpose of explaining why simple difference of mean across beneficiary and non-beneficiary is not impact. Coming to the point of what, we agree that we did not discuss much on the outcome variable or what should be an ideal outcome for assessing impact. The reason is, the outcome variables are rather specific to the intervention under consideration and very difficult to generalize.

For instance, if you are trying to measure the impact of credit on farmers, what should be the outcome variable? Most studies use ‘income’ as outcome. But there are counter arguments that income is not the best measure, as income from farming to large extent depends on weather and there will be year on year variations. Instead, assets or consumption expenditure is a better outcome. However, if we want to know the poverty alleviating effect of credit, then income is an ideal outcome. In summary, the choice of outcome depends on what intervention are we talking about and what are the expected outcomes and what we would like to measure. These things are to be decided by the researcher and we fear that these are not generalizable.

COMMENT 3: Assessing benefit/satisfaction as perceived by Small Holder Resource Poor Producer.

Authors response: To begin with, we would like to add that the methodologies discussed in the blog are meant for objectively quantifying the impact of intervention (Quantitative approach) and not for analyzing perception of stakeholders. By ‘objective assessment’ we mean that these methods estimate how the outcome variable changed due to intervention irrespective of what homo economics/planner wished for. Moreover, if the researcher expects that the benefits of intervention are heterogenous across small and large farmers, this can always be addressed through econometric methods. This can be tested and even estimated separately. Many qualitative tools can also be used for the purpose.

COMMENT 4: Easy to use methodologies’ to assess impact as perceived by these families

Authors response: Unfortunately, there is no universal recommendation regarding best methodologies. Secondly, all the methods are simple if we understand the principle behind them.

COMMENT 5: If the development project is aimed at improving livelihood of these producers (the needy group and major contributor to our Food Basket) should we not have clarity as to how they perceive benefit??

Authors response: It is absolutely correct that if the intervention is targeted for poor households, then, we should measure the impact only on poor households. The population for the study from which sampling is to be done should be restricted to poor households unless we are interested in measuring the spill-over effects. If we are interested in estimating the effects separately (across poor and rich), we could always do that econometrically.

COMMENT 6: Most small holders follow mixed farming and their livelihood depends on income from Whole Farm. It is likely that interventions focused on one Sub-system may have negative impact on the other sub-systems?

Authors response: This is precisely the intuition behind highlighting the need of impact pathways. For example, let us assume that the intervention is seed of new variety. First year, farmer use the seed and gets higher yield. This might incentivize the farmer to use more fertilizer in the subsequent year. So, the outcome variable for second year should also include the input cost and not only yield. Further, due to increase in income of one enterprise, farmer might use more inputs in subsequent crop as well. Once a researcher has developed such impact pathways, it is easy to choose which outcome variable to consider for impact assessment. For example see paper by Emerick et al, 2016, where the authors examined how a new rice variety can reduce risk by providing flood tolerance increase adoption of a more labor-intensive planting method, area cultivated, fertilizer usage, and credit utilization. (Emerick, K., de Janvry, A., Sadoulet, E., & Dar, M. H. (2016). Technological innovations, downside risk, and the modernization of agriculture. American Economic Review, 106(6), 1537-61)

COMMENT 7: Sustainability of the benefit after the project support is over/withdrawn

Authors response: You are very correct in pointing out that the sustainability of the intervention is important. We didn’t discuss this issue as it is more relevant when we are dealing with specific set of policy interventions. You might wish to have look at Poverty Action Lab website for few such studies (https://www.povertyactionlab.org/policy-insights). We don’t think that there is a need for a dedicated methodology to measure the sustainability. A comparative measure of impact when the intervention was there and after it is withdrawn will serve the purpose. Or even a comparison of outcomes across place where the intervention where the intervention is continued with that of place where it is discontinued, after matching or some other methodologies can also give indication on sustainability.

ANOTHER GENERAL COMMENT ABOUT THE BLOG: (Posted on the website by Srajesh VK and Shafi Afroz) : The methods discussed in the blog measures the impact in terms of economic indicators.I think impact of extension interventions goes beyond that.

Authors response: We are happy to disagree at this point. Though most of the examples quoted are from economic perspective, the methods perse wont change across economics and extension for that matter. In fact few methods that we have discussed are not originally used by economists. To quote one example, RCT is more common in medical research, than in economics. Same is the case with Propensity Score Matching. Coming to extension interventions, the methods of impact assessment remains valid and only the outcome variables change. In addition, there are many qualitative tools, which are equally useful. But we disagree on the point that the methods highlighted in the blog cannot be used for measuring impact of extension interventions.”

Hide or report this

Handbook on Impact Evaluation : Quantitative Methods and Practices

OPENKNOWLEDGE.WORLDBANK.ORG

Handbook on Impact Evaluation : Quantitative Methods and Practices

It is interesting to see an intellectually stimulating discussion is in progress on the blog content. This is a healthy sign when scholars/professionals read & get engaged on issues. I liked this blog and discussion on it because it help improve our understanding of the complex matter like social science research. There is difference between extension researchers &Agrl. Economists has been focussing mostly on quantitative analysis, while qualitative methods mostly have been used by extension researchers.Also, In extension, we have largely revolved around before n after type of research. Of late, there is tendancy among extension researchers to follow what economists are doing. For example, to compare, we are now trying to employ PSM. Agril. Economists too are evolving since PSM too have been adopted by them from other fields not too long ago. Somehow this is linked with possibilities of publishing in high rated journals too. If we use methodologies with mathematical modelling (which look complex to extensionists), chances are there for publishing in high rated journals. Congratulations to authors, AESA & thanks to those have commented as it is good for better understanding n growth of our discipline.